You can find the exact locations of our capture servers for each venue in our list of venues.

Architecture

Learn about Databento’s architecture and its implications on timestamping, data quality, and performance optimization.

Databento architecture

Databento's infrastructure is designed with the following considerations:

- Redundancy and high availability. There are at least two redundant servers, connections, and network devices in every hop of the critical path between us and your application.

- Self-hosted. All our servers are self-hosted. We rely on a cloud provider (AWS) only for our public DNS servers, the CDN for our website, and independent monitoring of our service uptime.

- Low latency. Our live data architecture is optimized for low-latency use cases, such as algorithmic trading.

- High data quality. We employ several techniques to achieve lossless capture and highly accurate timestamping.

Live data architecture

Our live data architecture is composed of the following elements:

- Colocation and direct connectivity to venue extranet

- Low latency switches and interconnects

- Lossless capture servers

- Zero-copy messaging

- Real-time data gateways

- SmartNIC-accelerated load balancing, firewall, and routing

- Direct Server Return (DSR) for load balancing

- Multiple enterprise IP transit providers

- Inline historical data capture and storage

Users connecting to us over a public network from within the same data center or metro area, using our Raw API and Databento Binary Encoding, can expect to receive data less than 1 millisecond after the venue disseminates the original data from their matching engine.

Colocation and direct connectivity to venue extranet

Our capture servers and real-time data gateways are generally colocated in the same primary data center as the venue's matching engine. We receive the data through a pair of direct connections (also called handoffs) to the venue's private network. This minimizes both the latency of our service and the variance of the network topology between us and the matching engine, yielding highly deterministic timestamps.

The venue will sometimes employ fiber equalization to ensure the same latency is achieved at handoffs across multiple data centers. In these cases, we may colocate our capture servers in any one of the data centers with equalized latency.

The only exception to primary data center colocation is when there are microstructural reasons for our trading users to prefer receiving the data elsewhere. These may include a central location for fragmented venues (like US equities), or proximity to a demarcation point for long-haul connectivity.

Low latency switches and interconnects

Depending on the specific venue, the data takes one to three switch hops after the handoff to reach our capture server. One of those switch hops, at most, may be a cross-connect within the same data center. We use a mix of Layer 1 and 3 switches, with 4 nanosecond and sub-300 nanosecond port-to-port latencies, respectively.

ts_in_delta represents the time it takes (in nanoseconds) for a data message to travel from the venue to our capture server's network card.

Info: You can find the schemas that include the ts_in_delta field in our list of schemas.

Lossless capture servers

Most venues disseminate their data over UDP multicast and provide a pair of multicast streams to offer protection against packet loss. Each of our capture servers receives both streams and compares packet sequence numbers to fill data gaps in the first stream. We call this packet arbitration or A/B arbitration.
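The gap-filling idea behind A/B arbitration can be sketched in a few lines. This is a minimal illustration, not our capture implementation (which runs on FPGAs): it assumes each packet is modeled as a hypothetical (sequence number, payload) tuple and that each feed arrives in sequence order, possibly with missing packets.

```python
from typing import Iterable, Iterator, Tuple

Packet = Tuple[int, bytes]  # (sequence number, payload); hypothetical model


def arbitrate(feed_a: Iterable[Packet], feed_b: Iterable[Packet]) -> Iterator[Packet]:
    """Merge two redundant feeds, emitting each sequence number once, in order.

    Gaps in one feed are filled from the other. Assumes each feed is sorted
    by sequence number (packets may be missing but are never reordered).
    """
    a, b = iter(feed_a), iter(feed_b)
    next_a, next_b = next(a, None), next(b, None)
    last_seq = None
    while next_a is not None or next_b is not None:
        # Take whichever pending packet has the lower sequence number.
        if next_b is None or (next_a is not None and next_a[0] <= next_b[0]):
            pkt, next_a = next_a, next(a, None)
        else:
            pkt, next_b = next_b, next(b, None)
        # Drop the duplicate copy already delivered from the other feed.
        if last_seq is None or pkt[0] > last_seq:
            last_seq = pkt[0]
            yield pkt
```

A packet missing from both feeds still passes through as a gap; detecting and reporting such gaps, and the second X/Y layer between capture servers, are outside this sketch.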

We perform a second layer of packet arbitration between our capture servers to further reduce the risk of data loss. This is a proprietary part of our system that we call X/Y arbitration. As such, our setup receives at least four redundant streams of the data.

Most software-based capture processes are unable to sustain 100% line rate capture during the 10 to 40 gigabit bursts that we constantly encounter from the data feeds.

To address this, we offload the capture of the packets to an FPGA on each capture server, which reduces packet loss down to the order of one in a billion packets per stream. Combined with our packet arbitration process across four redundant streams, our process significantly mitigates the risk of data loss on the billions of packets that we receive daily on full venue feeds.
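As a back-of-the-envelope illustration of why the redundant streams matter: a packet is lost only if every copy of it is dropped. The sketch below assumes losses are independent across streams and uses a hypothetical round figure of 10 billion packets per day. Correlated failures (for example, a packet the venue never sent on any stream) are not captured by this model, so the real risk is higher than this idealized figure.

```python
def expected_losses(per_stream_loss: float, streams: int, packets: float) -> float:
    """Expected number of packets dropped on every redundant stream.

    Assumes each stream drops packets independently with the same probability.
    """
    p_all_dropped = per_stream_loss ** streams
    return p_all_dropped * packets


# Illustrative inputs: about 1e-9 loss per stream (from the text above) and
# 10 billion packets per day (a hypothetical round number).
single = expected_losses(1e-9, streams=1, packets=10e9)  # about 10 packets/day
quad = expected_losses(1e-9, streams=4, packets=10e9)    # about 1e-26 packets/day
```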

Zero-copy messaging

We employ a brokerless messaging pattern with a zero-copy internal messaging protocol to support our low latency, high throughput requirements. Once processed in our capture servers, the data only takes a single sub-300 nanosecond switch hop to our real-time data gateways.

Real-time data gateways

The data is preprocessed in real time to support the various real-time customizations available on our live service. This includes parsing, normalization, and book construction. Depending on the venue, the data spends about 5 to 35 microseconds in our capture server, including these preprocessing steps.

Our real-time data gateways are responsible for live data distribution. This includes negotiation of TCP sessions, inline data customization, transcoding, and handling of requests that vary from client to client. Our data spends about 5 microseconds in this layer before it takes another switch hop, either to a customer's link (for customers connected over private cross-connect or peering) or to a network gateway that handles load balancing, firewall, and BGP routing (for customers connected over the internet).

Info: We also allow users to bypass our firewall with a private cross-connect or peering to achieve sub-41.3 microsecond latencies. Learn more from our Databento locations and connectivity options guide or contact us for details.

The time from when the data terminates on the venue's handoff to when our processed data leaves any of our real-time data gateways is provided in our data as ts_out - ts_recv.

Info: Receiving ts_out is enabled through a parameter at the authentication stage of the Raw API and in the constructors of the Live clients.
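Given one record's nanosecond timestamps (with ts_out enabled as described in the note above), the intervals from this section reduce to simple subtraction. This sketch assumes the values have already been read into plain integers; how you obtain them depends on your client library, and the Timestamps container and latency_breakdown function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Timestamps:
    """Hypothetical container for one record's timestamps (all in nanoseconds)."""
    ts_recv: int      # capture server receive time (assumed UNIX epoch ns)
    ts_in_delta: int  # venue-to-capture-server transit time (ns)
    ts_out: int       # time the record left a real-time data gateway (assumed UNIX epoch ns)


def latency_breakdown(ts: Timestamps) -> dict:
    """Split a record's journey into the intervals described in this section."""
    return {
        # Venue to our capture server's network card.
        "venue_to_capture_ns": ts.ts_in_delta,
        # Venue handoff to the record leaving our real-time data gateway.
        "databento_internal_ns": ts.ts_out - ts.ts_recv,
        # Approximate venue send time, reconstructed from ts_recv.
        "venue_send_ns": ts.ts_recv - ts.ts_in_delta,
    }
```

Because ts_in_delta depends on venue-provided timestamps, the reconstructed venue_send_ns inherits whatever accuracy limits the venue's own clock has.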

SmartNIC-accelerated load balancing, firewall, and routing

We employ DPDK-based, SmartNIC-accelerated network gateways that process packets up to 100 gigabit line rate with a median latency of 64 microseconds and 99th percentile latency of 311 microseconds.

Direct Server Return (DSR) for load balancing

In a traditional load balancing setup, both incoming and outgoing traffic pass through a load balancer, which adds latency and another potential bottleneck for a high-volume streaming application. With DSR, incoming client traffic still passes through our load balancers; however, our servers respond and push data directly to clients, bypassing the load balancer hop altogether.
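The difference in the return path can be shown with a toy hop model. This is a conceptual sketch only, not how our gateways are implemented; the hop names and round_trip function are hypothetical. In real DSR deployments, backends typically answer using the load balancer's virtual IP as the source address, so the client still sees a single endpoint.

```python
def round_trip(dsr: bool) -> list:
    """Return the hops a request and its response traverse (toy model)."""
    request = ["client", "load_balancer", "server"]
    if dsr:
        # DSR: the server sends its response straight back to the client.
        response = ["server", "client"]
    else:
        # Traditional: the response goes back through the load balancer.
        response = ["server", "load_balancer", "client"]
    # Join the two legs without repeating the server hop.
    return request + response[1:]
```

For a streaming service, the response direction carries nearly all of the bytes, which is why removing the load balancer from that direction matters most.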

Multiple enterprise IP transit providers

To maintain stable routes from the public internet, we multiplex connections from multiple IP transit providers and private peering partners on our network gateways.

Inline historical data capture and storage

We capture our historical data on the same servers that normalize and disseminate our live data in real time. This ensures our historical data has the same timestamps as our live data.

Historical data is stored on a self-hosted, highly available storage cluster. To protect data integrity, our storage system has built-in detection and self-healing of silent bit rot.

The stored data is then made available through our historical API servers after a short delay, though licensing restrictions may limit when you can access this data. To ensure high uptime, our historical API servers are deployed as multiple instances in our cluster.

See also: You can read our docs on precision, accuracy, and resolution and on timestamps on Databento and how to use them for more details about our data integrity and timestamping accuracy.

Timestamping

Precision, accuracy, and resolution

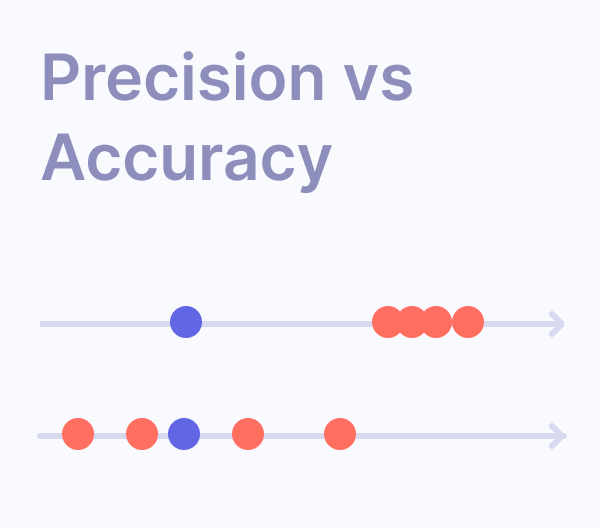

In our documentation, we quantify these terms as follows:

- Precision. The maximum deviation between timestamps delivered in the data and any reference time.

- Accuracy. The maximum deviation between timestamps delivered in the data and Coordinated Universal Time (UTC).

- Resolution. An arbitrary unit of time. The minimum increment of timestamps delivered in the data.

- Jitter. Closely related to precision. The maximum deviation of a clock's periodicity from its intended periodicity.

Info: Unfortunately, common speech uses the opposite sign convention. A time source with high precision or accuracy is said to have low maximum deviation. Likewise, high resolution refers to a small minimum timestamp increment. A clock with high jitter has low precision.
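To make the first two definitions concrete, the sketch below computes them from paired samples of delivered timestamps and true UTC, both in nanoseconds. It makes one interpretive assumption: precision is measured against the best reference that differs from UTC by a constant offset, so a clock that is consistently early by a fixed amount is precise but not accurate. The function names and sample values are made up for illustration.

```python
def accuracy_ns(stamps: list, utc: list) -> float:
    """Maximum absolute deviation of delivered timestamps from UTC."""
    return max(abs(t - u) for t, u in zip(stamps, utc))


def precision_ns(stamps: list, utc: list) -> float:
    """Maximum deviation from the best constant-offset reference clock.

    Placing the reference offset at the midpoint of the error range minimizes
    the maximum deviation, which is then half the spread of the errors.
    """
    errors = [t - u for t, u in zip(stamps, utc)]
    return (max(errors) - min(errors)) / 2


# A made-up clock that runs about 500 ns early with a few ns of jitter.
utc = [1_000, 2_000, 3_000]
stamps = [505, 1_495, 2_500]
acc = accuracy_ns(stamps, utc)    # 505 ns
prec = precision_ns(stamps, utc)  # 5.0 ns
```

Under these definitions, precision can never be worse than accuracy (UTC itself is one candidate reference), but the example clock shows the converse fails: it is precise to 5 ns yet about 500 ns off UTC.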

The diagram above helps to visualize these terms.

Nanosecond resolution timestamps

You may have seen other data sources that claim to provide nanosecond-resolution timestamps. But this claim is meaningless on its own, as any device is capable of nanosecond-resolution timestamps! You can guess the current time and write down a number by hand to nanosecond resolution, but that doesn't mean your body is capable of nanosecond-precision timestamping.

Likewise, you will find that most sources of financial data that carry nanosecond-resolution timestamps will use software-based timestamping that relies on CPU registers. There are a host of issues with this:

- The precision of CPU registers varies substantially with thermal conditions.

- The timestamping application is subject to sources of high jitter, such as interrupts, kernel scheduler events, context switching, and interference from other applications. This is especially the case with data providers that use cloud-based virtual machines to capture the data.

To safeguard against these issues, we offload our timestamping to hardware, such as FPGAs, which achieves more deterministic results. Depending on the techniques used, software timestamps may have a precision of anywhere from 100 nanoseconds to over 10 milliseconds. In contrast, our hardware timestamps achieve a precision from 4 to 10 nanoseconds (depending on the market).

Time synchronization with Precision Time Protocol

You will also find that most sources of financial data rely on Network Time Protocol (NTP) to synchronize their clocks. In practice, software timestamps relying on NTP synchronization against public NTP servers can deviate from UTC by up to the order of 100 milliseconds. Typical implementations of Precision Time Protocol (PTP) will achieve accuracy from sub-microsecond to somewhere under 50 microseconds.

At each data center, Databento uses a pair of GPS grandmaster clocks with PTP class 6 and clock accuracy under 25 ns against UTC as the time source. Our data servers achieve sub-microsecond synchronization to these GPS clocks.

Accuracy, precision, and resolution of Databento timestamps

Databento's data is intended to be suitable for high fidelity simulation, including backtesting of passive and arbitrage strategies, order routing, transaction cost analysis, and execution research. Our receive timestamps (ts_recv) have the following properties:

- Precision. We use hardware timestamping to achieve a precision from 4 to 10 nanoseconds.

- Accuracy. We use PTP time synchronization against GPS receiver-based time sources that are backed up by rubidium atomic oscillators to ensure holdover accuracy. Our timestamps achieve an accuracy from under 100 nanoseconds to under 1 microsecond, depending on the capture server's location.

- Resolution. All of our timestamps are in nanosecond resolution.

Any exception to the above for a given dataset is stated on the detail page of the dataset. Such exceptions generally arise when we have to backfill data from other sources or use different hardware for a particular market.

There is no guarantee that timestamps embedded by the original publisher or market (ts_event, ts_in_delta) will have high accuracy, precision, or resolution. Some markets still use millisecond-resolution timestamps, inherited from the legacy FIX protocol. Many markets use NTP time synchronization and software timestamps. Often, our timestamps will have even higher precision and accuracy than those provided by the market itself.
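One way to check the effective resolution of venue-provided timestamps such as ts_event is to find the largest unit that every value is a multiple of. This is a heuristic sketch over plain integer nanosecond values, and effective_resolution_ns is a hypothetical name: millisecond timestamps padded into a nanosecond field show up as 1,000,000 ns.

```python
from functools import reduce
from math import gcd


def effective_resolution_ns(timestamps: list) -> int:
    """Largest unit (in ns) that every timestamp in a non-empty list is a multiple of.

    Heuristic: with enough samples, this reveals timestamps that are carried
    in nanoseconds but were truncated to microseconds or milliseconds. With
    very few samples, the result can overstate the true unit.
    """
    return reduce(gcd, timestamps)
```

Running this over a day of ts_event values next to the corresponding ts_recv values gives a quick sense of how much finer our receive timestamps are than the venue's.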

Locations and network connectivity

Overview

The following table provides an overview of the various connectivity options supported by Databento and their recommended use cases.

| Connectivity option | Services | Best for | Ports | Latency (90th percentile) |
|---|---|---|---|---|
| Internet | Live, Historical | Lowest cost | | 0.5+ ms |
| Dedicated: Interconnect with AWS, Google Cloud or Microsoft Azure | Live, Historical | Mission-critical, cloud-based applications requiring high uptime or consuming entire feed(s) | 1G | 1.7+ ms |
| Dedicated: Interconnect at proximity hosting location | Live, Historical | Mission-critical, self-hosted applications requiring high uptime or consuming entire feed(s) | 1G | 0.5+ ms |
| Dedicated: Cross-connect with any colocation or managed services provider (MSP) | Live | Applications requiring lowest latency | 10G, 25G | 42.4 μs |
| Dedicated: Colocation with Databento | Live | Applications requiring lowest latency | 10G, 25G | 42.4 μs |
| Peering | Live, Historical | Colocation, hosting and extranet providers, MSPs | 10G, 25G | 0.5+ ms |

Connecting via internet

By default, our client libraries connect to our gateways over the internet, using our public DNS hostnames.

Databento's services are designed to be efficient when consumed over the internet. We use a mix of compression and normalization techniques to minimize the bandwidth requirements of our data services, so that you can even consume full venue feeds (which would typically require a 10+ gigabit cross-connect) over a regular internet connection.

Hosting your application in close geographical proximity to our gateways is the first step you can take towards ensuring a fast and stable connection. To help you make that determination, here are the locations of our gateways as well as hostnames that you can run a ping or traceroute against:

| Service | Nearest metro | Data center | Address | Public DNS hostname |
|---|---|---|---|---|
| Historical (BO1) | Boston | CoreSite BO1 | 70 Inner Belt Rd, Somerville, MA | hist.databento.com |
| Live (DC3) | Chicago | CyrusOne Aurora I | 2905 Diehl Rd, Aurora, IL 60502 | dc3.databento.com |
| Live (NY4) | New York | Equinix NY4 | 755 Secaucus Rd, Secaucus, NJ 07094 | ny4.databento.com |
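Besides ping and traceroute, you can time TCP handshakes to a gateway, which exercises the same transport the live service uses and still works where ICMP is filtered. This is a rough sketch using only the Python standard library; tcp_connect_rtt_ms is a hypothetical name, and probing port 13000 is an assumption based on the live TCP port range listed in the firewall table further down this page.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int, samples: int = 5, timeout: float = 2.0) -> list:
    """Time several TCP handshakes to host:port, in milliseconds.

    Each sample opens a connection and closes it immediately, so the measured
    time approximates one network round trip plus connection setup overhead.
    DNS is resolved once up front so lookups do not inflate the samples.
    """
    addr = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4]
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection(addr[:2], timeout=timeout):
            pass
        results.append((time.perf_counter() - start) * 1000.0)
    return results
```

For example, comparing sorted(tcp_connect_rtt_ms("ny4.databento.com", 13000)) against the same call for dc3.databento.com from your host suggests which gateway is closer; look at the spread across samples as well as the minimum.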

There is no strong guarantee that the shortest route between your application and our gateways will be taken, even if they're situated in the same data center. To address this issue and achieve stable routes across the internet, Databento relies on blended bandwidth and private peering agreements, which are summarized in the table below. If minimizing network variance is important for your use case, we recommend using any of these providers.

| Service | Data center | Transit providers and private peers |
|---|---|---|
| Historical (BO1) | CoreSite BO1 | Lumen, NTT, Telia, Cogent, Verizon, Google, Microsoft, Akamai |
| Live (DC3) | CyrusOne Aurora I | Lumen, Zayo |
| Live (NY4) | Equinix NY4 | Lumen, Hurricane Electric |

If your security policies only allow traffic from designated IPs, ensure that you can route to the entire range of Databento's public IP addresses, from 209.127.152.0 to 209.127.159.255 (or 209.127.152.0/21 in CIDR notation). We are allocated exclusive ownership and usage of these public IPs by ARIN.

You should also allow the following UDP and TCP port ranges used by our services through your access lists and firewalls. The destination ports are port numbers that Databento's servers listen on.

| Protocol | Destination ports |
|---|---|
| NTP | 123 |
| HTTPS (historical) | 443 |
| FTP | 21, 61000-61004 |
| UDP | 13000-13050 |
| TCP (live) | 13000-13050 |
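If you generate firewall rules or audit allowlists from a script, the address block and port table above can be encoded with Python's standard ipaddress module. The ALLOWED_PORTS structure and is_allowed helper are hypothetical; assigning NTP to UDP and HTTPS and FTP to TCP follows those protocols' standard transports, and treating 61000-61004 as FTP passive data ports is an assumption.

```python
import ipaddress

# Databento's public address block, as stated above.
DATABENTO_NET = ipaddress.ip_network("209.127.152.0/21")

# Destination ports from the table above, keyed by transport protocol.
ALLOWED_PORTS = {
    "udp": {123} | set(range(13000, 13051)),  # NTP and the UDP range
    "tcp": (
        {21, 443}                     # FTP control, HTTPS (historical)
        | set(range(61000, 61005))    # FTP data ports (assumed passive mode)
        | set(range(13000, 13051))    # live TCP range
    ),
}


def is_allowed(dst_ip: str, protocol: str, dst_port: int) -> bool:
    """True if traffic to dst_ip:dst_port over protocol matches the allowlist."""
    return (
        ipaddress.ip_address(dst_ip) in DATABENTO_NET
        and dst_port in ALLOWED_PORTS.get(protocol.lower(), set())
    )
```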

Connecting via dedicated connectivity

See our dedicated connectivity guide for more details.

Connecting via peering

Databento supports private peering over BGP at CyrusOne Aurora and the Equinix NY2/4/5/6 facilities for our live data, and at Markley One Summer Street for historical data. Our BGP ASN is AS400138 and more info can be found on our PeeringDB profile. We do not support public peering at an IX at this time. Contact support to set up private peering with Databento.

Dedicated connectivity

Databento provides private, dedicated connectivity for customers who require predictable latency, high throughput, and higher uptime.

The following table provides an overview of the four dedicated connectivity options supported by Databento and the recommended use case of each.

| Connectivity option | Services | Best for | Ports | Latency (90th percentile) |
|---|---|---|---|---|
| Interconnect with AWS, Google Cloud or Microsoft Azure | Live, Historical | Mission-critical, cloud-based applications requiring high uptime or consuming entire feed(s) | 1G | 1.7+ ms |
| Interconnect at proximity hosting location | Live, Historical | Mission-critical, self-hosted applications requiring high uptime or consuming entire feed(s) | 1G | 0.5+ ms |
| Cross-connect with any colocation or managed services provider (MSP) | Live | Applications requiring lowest latency | 10G, 25G | 42.4 μs |
| Colocation with Databento | Live | Applications requiring lowest latency | 10G, 25G | 42.4 μs |

Contact support if you need dedicated connectivity or any customized connectivity solution.

Interconnect with AWS, Google Cloud or Microsoft Azure

This is the recommended setup to achieve 1.5 to 7 ms latency with Databento, and the most cost-effective of our four dedicated connectivity solutions. It provides a 1 Gb connection, which is adequate for consuming entire feeds.

This setup leverages our existing interconnections to AWS, Google Cloud, and Microsoft Azure on-ramps at Equinix CH1 and NY5. A dedicated layer 3 connection is installed and ensures that our data traffic goes through your cloud provider's backbone to reach your cloud services in any availability region or zone.

Note that connecting to the cloud over a dedicated interconnect may achieve only similar median latency to connecting over the public internet, as Databento has a highly optimized IP network with diverse routes across tier 1 ISPs. However, this solution is expected to achieve better tail latencies.

| Total NRC | Total MRC | Latency* (90th percentile) |
|---|---|---|
| $300 | $750 | 1.7 ms (DC3 to Azure US N. Central); 6.8 ms (DC3 to GCP us-central1) |

* Varies with site. Measured from the first byte of data in at our boundary switch to the last byte read from your client socket.

Advantages

- Ease of setup, especially if your infrastructure is already AWS, Google Cloud, or Microsoft Azure.

- It avoids contention on public internet routes, ensuring stable tail latencies and sufficient bandwidth between you and our live data gateways.

- Simplicity: redundancy is built in with BGP, which falls back on our public internet routes if the dedicated connection is down. Once set up, you can connect to our gateways using our public DNS hostnames.

- You can use public cloud services, which are more cost-effective compared to conventional hosting and colocation services; it's cheaper to spin up redundant servers on public cloud.

- Your cloud servers may be situated in any availability region or zone to use this solution, giving you many flexible hosting options.

- Most cost-effective of our four dedicated connectivity options.

Disadvantages

- Most other financial services providers, such as your broker, trading venues, and extranet providers, only provide connectivity at certain points of presence (PoPs) and don't support connections to public cloud. If you need to connect to such external services besides Databento, you'll most likely need us to arrange additional cross-connects and backhaul. If these services require much more than 1 Gb of bandwidth, it becomes more cost-effective to pursue our other dedicated connectivity options.

- Higher latency than our other connectivity options.

Interconnect at proximity hosting location

This is similar to the setup for an Interconnect with AWS, Google Cloud or Microsoft Azure, except that we'll connect to your own infrastructure at a proximity hosting site instead of a public cloud site. At the moment, the following proximity hosting locations are supported:

- Equinix CH1/4, 350 E Cermak Ave.

- Equinix NY1, 165 Halsey St.

- Equinix NY2, 275 Hartz Way.

- Equinix NY9, 111 8th Ave.

- Equinix LD4, 2 Buckingham Ave, Slough, UK.

- Equinix FR2, Kruppstrasse 121-127, Frankfurt, Germany.

- LSE, 1 Earl St, London, UK.

- Interxion LON-1, 11 Hanbury St, London, UK.

| Total NRC | Total MRC | Latency* (90th percentile) |
|---|---|---|
| $300 | $750+ | 0.59 ms (NY4 to NY1); 1.05 ms (DC3 to CH1) |

* Varies with site. Measured from the first byte of data in at our boundary switch to the last byte read from your client socket.

Cross-connect with any colocation or managed services provider (MSP)

This is the minimum recommended setup to achieve sub-50 μs latency with Databento. We’re carrier-neutral and vendor-neutral: you may use any colocation provider or MSP that allows you to run a cross-connect to us at either CyrusOne Aurora I (DC3) or Equinix NY4/5. At the moment, our Raw API for live data only supports TCP transport, and a single port is sufficient for reliable transmission with this setup.

Latency varies with the site and data feed. The estimate below is based on our slowest path, which includes the following hops:

- Arista 7050SX3-48YC, 800 ns

- Arista 7260X3, 450 ns

- Arista 7060SX2, 565 ns

- Mellanox Spectrum SN2410, 680 ns

| Total NRC | Total MRC | Latency* (90th percentile) |
|---|---|---|
| $300 | $2,177.50 | 42.4 μs |

* Measured from the first byte of data in at our boundary switch to the last byte of data out onto your cross-connect.
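Summing the per-hop switch latencies listed above shows how small a share of the 42.4 μs figure the switching fabric accounts for; the remainder is spent elsewhere on the path, such as the capture server and gateway processing described in the architecture section. This is simple arithmetic on the documented numbers, not a measured profile, and it mixes a 90th-percentile total with nominal per-hop figures, so treat the split as approximate.

```python
# Per-hop port-to-port latencies on the slowest path, in nanoseconds (from the list above).
HOPS_NS = {
    "Arista 7050SX3-48YC": 800,
    "Arista 7260X3": 450,
    "Arista 7060SX2": 565,
    "Mellanox Spectrum SN2410": 680,
}
TOTAL_P90_NS = 42_400  # 42.4 μs, 90th percentile, from the table above

switching_ns = sum(HOPS_NS.values())           # 2,495 ns
other_ns = TOTAL_P90_NS - switching_ns         # 39,905 ns
switching_share = switching_ns / TOTAL_P90_NS  # roughly 5.9%
```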

Colocation with Databento

Info: This option is only available to customers with an existing annual, flat-rate subscription for Databento Live.

This is similar to cross-connecting with any colocation or MSP, and achieves similar latency. The main difference is that you're colocating with us directly.

| Total NRC | Total MRC | Latency* (90th percentile) |
|---|---|---|
| $1,500 | $2,048.75 | 42.4 μs |

* Measured from the first byte of data in at our boundary switch to the last byte of data out on your data port.

Advantages

- Allows us to provide more end-to-end support and debug a wider range of issues.

- Provides you with predictable latency that matches our test bench.

- No cross-connect to Databento required. You're in the same rack as our live gateway and this skips hops on your colocation provider or MSP's edge border leaf or spine switches.

Disadvantages

- Not suitable if you anticipate a complex environment with more than 3 cross-connects, you have frequently-changing requirements, or you need a diverse selection of on-net counterparties and direct venue connectivity.

Databento NTP service

Network Time Protocol (NTP) is an internet protocol commonly used to synchronize time between computer systems over a network. The Databento NTP service is a free public time service, based on NTP, that's available to everyone over the internet and on public cloud.

Who should use this

Databento's NTP service is ideal for financial users on proximity hosting services and public cloud providers like AWS, Azure, and GCP, and for users whose servers are located in the Chicago, New York, and New Jersey metro regions. More accurate time synchronization practices exist for these users, but the next marginal improvement over our NTP service requires dedicated hosting or cross-connects, which are significantly more costly.

All live data users connecting to Databento over the internet are strongly recommended to use this time service. It keeps their local system clock synchronized against Databento's internal time source, so that latency estimates taken between local timestamps and Databento timestamps are more accurate.

Getting started

Anyone can synchronize their clocks against our time service by pointing their NTP client at ntp.databento.com.

Alternatively, you can pick specific servers from this pool, as follows:

| Server | Data center | Location |
|---|---|---|
| ntp-01.dc3.databento.com | CyrusOne Aurora I | 2905 Diehl Rd, Aurora, IL 60502 |
| ntp-01.ny4.databento.com | Equinix NY4 | 755 Secaucus Rd, Secaucus, NJ 07094 |
| ntp-02.ny4.databento.com | Equinix NY4 | 755 Secaucus Rd, Secaucus, NJ 07094 |

Recommended configuration

Here are some recommendations for obtaining good accuracy on your system clock against our NTP service:

- Use a high quality NTP client implementation, such as chrony.

- In most cases, you should only step the clock in the first few updates, before your application starts. You can configure this with the makestep directive in your chrony configuration:

makestep 1 3

- Ensure your system clock is only adjusted by slewing while your application is running. This means that your clock is adjusted by slowing down or speeding up instead of stepping, which ensures monotonic timestamps. By default, chrony adjusts the clock by slewing.

- However, if your system does not have a real-time clock, or is a virtual machine that can be suspended and resumed with an incorrect time, it may be necessary to allow stepping on every clock update, as follows:

makestep 1 -1

In summary, you can add the following lines to your chrony configuration file, found at /etc/chrony.conf or /etc/chrony/chrony.conf:

pool ntp.databento.com iburst minpoll 4 maxpoll 4
# Step the system clock instead of slewing it if the adjustment is larger than
# one second, but only in the first three clock updates
makestep 1 3

You can check that chrony is now using your new NTP sources with the chronyc sources command.
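If you want to monitor synchronization from a script, the Last sample column of chronyc sources output can be parsed into nanoseconds. This sketch assumes chrony's default human-readable format, where that column shows the adjusted offset, the measured offset in brackets, and the estimated error after +/-, each with a unit suffix (ns, us, ms, or s). The parse_last_sample name is hypothetical, and chronyc's -c option (CSV output) may be a sturdier input for production monitoring.

```python
import re

_UNITS_NS = {"ns": 1, "us": 1_000, "ms": 1_000_000, "s": 1_000_000_000}
_SAMPLE = re.compile(
    r"(?P<adj>[+-]?\d+)(?P<adj_u>ns|us|ms|s)\[\s*(?P<meas>[+-]?\d+)(?P<meas_u>ns|us|ms|s)\]"
    r"\s*\+/-\s*(?P<err>\d+)(?P<err_u>ns|us|ms|s)"
)


def parse_last_sample(line: str) -> dict:
    """Extract the Last sample fields from one chronyc sources row, in nanoseconds."""
    m = _SAMPLE.search(line)
    if m is None:
        raise ValueError(f"no Last sample column in: {line!r}")
    return {
        "offset_ns": int(m["adj"]) * _UNITS_NS[m["adj_u"]],
        "measured_offset_ns": int(m["meas"]) * _UNITS_NS[m["meas_u"]],
        "error_ns": int(m["err"]) * _UNITS_NS[m["err_u"]],
    }
```

Alerting when error_ns exceeds a threshold you choose is a simple way to notice when a host drifts away from its time source.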

Architecture - How it works

Since the margin of error in time synchronization is largely a function of distance and hops to the time source, Databento's NTP service leverages our network of colocated servers at various financial data centers to help users obtain more accurate time.

The Databento NTP service is composed of a pool of stratum 1 NTP servers at the CyrusOne Aurora I and Equinix NY4 data centers. In contrast, common time providers usually situate their time servers in general-purpose data centers that are further from popular colocation and proximity hosting sites used by financial firms.

Another important reason to use Databento's time service is the nature of its clock source. The time servers for our service are synchronized against the same PTP time source used for the supplemental receive and outbound timestamps in our data, so our time servers have a margin of error under 200 nanoseconds:

MS Name/IP address Stratum Poll Reach LastRx Last sample
===============================================================================
#* PTP 0 0 377 1 +9ns[ +11ns] +/- 159ns

In turn, our PTP source is based on a pair of grandmaster clocks with under 25 nanosecond root-mean-squared error against UTC. Other public NTP services do not provide any guarantees about the quality of their clock sources—even if you were to obtain a time sync to their NTP servers with sub-millisecond tolerance, you may still be off from UTC by tens of milliseconds.

Performance optimization

Locations

What’s DC3?

DC3 refers to Aurora DC3, the legacy name of the CME data center that serves as the primary colocation site for CME's matching engine, before it was acquired by CyrusOne and renamed Aurora I. Since we have some data predating this acquisition, we still use this site name for naming consistency.

Where are DC3, NY4, and NY5 located?

DC3 is located at 2905 Diehl Road, Aurora, IL 60502. NY4 and NY5 are located at 755 and 800 Secaucus Rd, Secaucus NJ 07094 respectively.

Setup

Do I need to install or license any special software to make use of dedicated connectivity?

No, you do not require any special software. Databento’s Raw API and official Python, C++ and Rust client libraries support dedicated connectivity. Our live data offering is a managed service, meaning you don’t have to run our software as a process on your machine.

Do I need any network configuration to make use of dedicated connectivity?

We’ll assist you in configuring network routes to our gateways depending on the option that you pick. For cross-connects and colocation, you’ll pass in a private IP to take a dedicated route. For dedicated interconnects, you’ll rely on BGP to select the shortest path.

Performance

Does latency matter for my use case? If so, is Databento fast enough?

Most electronic trading participants do not see an appreciable benefit from going below 50 μs or even 1 ms, so our dedicated connectivity options and normalized feed performance should be more than adequate for most of our users.

If you need even lower latency, there are specialized solutions tailored to your use case. However, to our knowledge, the next fastest solution will cost at least three times as much. This is intentional: Databento is designed to sit on the Pareto frontier between latency and cost; that is, each of our dedicated connectivity options is the fastest commercially available solution at its price point.

Being on the sweet spot between latency and pricing puts us in a position to solve many issues. For instance, Databento is great for initial exploration in cases where you have a catch-22 of being unable to quantify if you need lower latency until you start trading. Also, through us, users with cloud-based infrastructure can achieve comparable performance to colocated, bare metal infrastructure at a fraction of the cost.

Why does Databento Live use TCP for transport? Don’t trading venues use UDP for raw feeds? Isn’t TCP slower than UDP?

At this time, we’re only supporting TCP so that all of our customers get reliable transmission and congestion control without additional complexity in the client implementation.

On a side note, TCP is potentially faster than UDP for customers connecting to us over long-haul links, and we’ve made considerable efforts to optimize our Raw API and measure its performance.

Raw financial feeds often use UDP multicast because of its simplicity and potential for latency optimization on networks with excess bandwidth, as in the case when you have a direct connection to the trading venue’s extranet. However, TCP is more efficient in environments with limited bandwidth, network congestion, and a large proportion of small messages—because the TCP protocol allows for buffering of the data messages to fill a full network segment. In fact, some popular ECNs and OTC trading venues have to support customers connecting over the internet and have TCP-based feeds as their primary, raw data feed.

Do you lease out server hardware to your customers?

We only lease out hardware to customers who colocate with us directly.

Hardware

Do you offer VMs or virtual private servers (VPSes)?

We don’t currently offer VMs or VPSes.

Do you have a recommended server spec?

Databento has very low minimum system requirements, so the hardware specification will depend more on your application and we do not have any hard recommendations. Most of our customers have servers with a dedicated NIC from AMD, Mellanox, or Intel.

Fees and terms

Do you charge all of the fees listed, including for services not provided directly by Databento?

We only charge the fees that list Databento in the “Paid to” column. Other listed fees are estimates and are payable to the provider.

What’s the term length for any of these dedicated connectivity options?

We require a 12-month contract.